# Concurrency

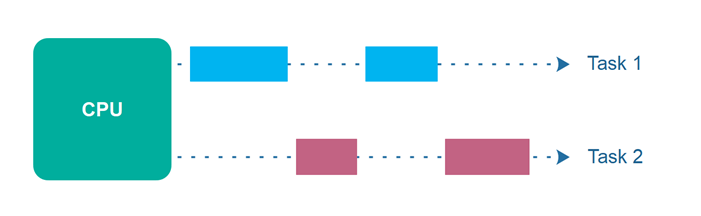

Concurrency means that an application makes progress on more than one task seemingly at the same time, that is, concurrently.

A single CPU cannot execute more than one task at exactly the same instant. Even so, more than one task can be in progress at the same time inside the application or process. To make progress on several tasks concurrently, the CPU switches between the tasks during execution, running a little of one and then a little of another.
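The switching described above can be sketched with Python generators. This is only a toy illustration, not how an operating system scheduler is implemented: the names `task` and `run_concurrently` are hypothetical, each task pauses voluntarily with `yield`, and a single thread plays the role of the single CPU.

```python
from collections import deque


def task(name, steps, log):
    """A task that does `steps` units of work, pausing after each one."""
    for step in range(1, steps + 1):
        log.append(f"{name}: step {step}")
        yield  # hand control back so another task can run


def run_concurrently(tasks):
    """Run generator-based tasks on one thread, switching after every step."""
    ready = deque(tasks)
    while ready:
        current = ready.popleft()
        try:
            next(current)          # run the task until its next pause
            ready.append(current)  # not finished: put it back in the queue
        except StopIteration:
            pass                   # finished: drop it


log = []
run_concurrently([task("A", 2, log), task("B", 3, log)])
print(log)
# ['A: step 1', 'B: step 1', 'A: step 2', 'B: step 2', 'B: step 3']
```

Both tasks are in progress over the same period, yet at any given moment only one of them is actually running.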

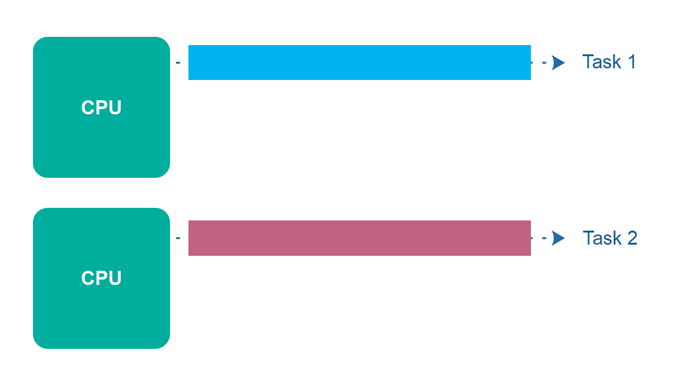

# Parallel Execution

Parallel execution is when a computer has more than one CPU or CPU core and makes progress on more than one task simultaneously. Note that parallel execution is not the same thing as parallelism, which is described further below.

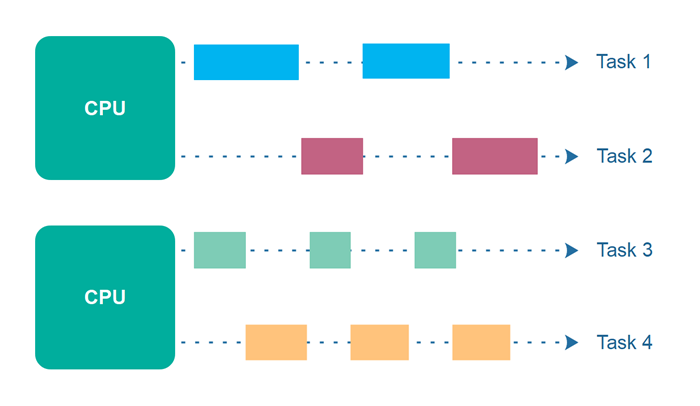

# Parallel Concurrent Execution

Parallel concurrent execution is possible when threads are distributed among multiple CPUs. Threads executed on the same CPU are executed concurrently, whereas threads executed on different CPUs are executed in parallel.
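The distinction can be made concrete with a small model. The sketch below assumes a fixed round-robin assignment of threads to CPUs, which is a simplification: real schedulers may move threads between CPUs over time. The function names `assign_threads` and `relationship` are hypothetical.

```python
def assign_threads(threads, cpu_count):
    """Distribute thread names over CPUs round-robin (toy model)."""
    assignment = {cpu: [] for cpu in range(cpu_count)}
    for index, thread in enumerate(threads):
        assignment[index % cpu_count].append(thread)
    return assignment


def relationship(first, second, assignment):
    """Return how two assigned threads relate to each other."""
    for threads in assignment.values():
        if first in threads and second in threads:
            return "concurrent"  # same CPU: they take turns
    return "parallel"            # different CPUs: they can run simultaneously


assignment = assign_threads(["T1", "T2", "T3", "T4"], cpu_count=2)
print(assignment)                            # {0: ['T1', 'T3'], 1: ['T2', 'T4']}
print(relationship("T1", "T3", assignment))  # concurrent
print(relationship("T1", "T2", assignment))  # parallel
```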

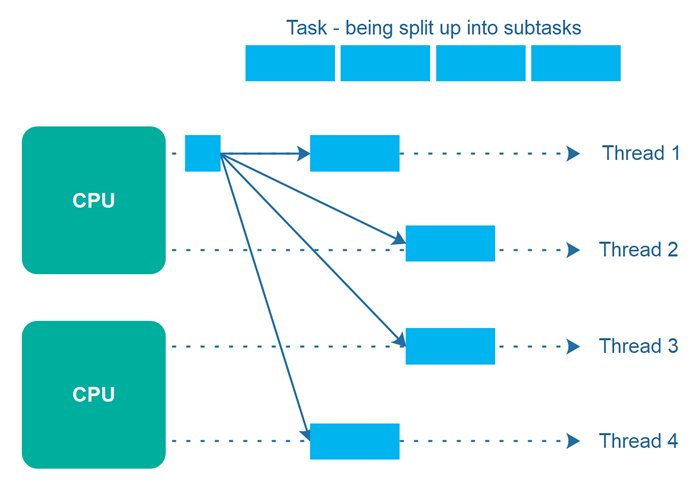

# Parallelism

Parallelism means that an application splits its tasks into smaller subtasks that can be processed in parallel, for instance on multiple CPUs at the same time. Parallelism describes how the work itself is divided, so it does not refer to the same execution model as parallel concurrent execution, even if the two may look similar on the surface.

For example, if a task is split into 4 subtasks and each subtask is executed by its own thread on its own CPU (4 CPUs in total), the task execution is fully parallel. However, it is not always easy to break a task into exactly as many subtasks as there are CPUs available. Often it is easier to break a task into the number of subtasks that fits naturally with the task at hand, and then let the thread scheduler take care of distributing the threads among the available CPUs.
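A minimal sketch of this approach is shown below, using a sum over a list as the task. The helper names `split` and `parallel_sum` are hypothetical. The number of subtasks (4) is chosen independently of the number of workers (2), and the executor decides which worker runs which subtask. One caveat: in standard CPython builds, threads do not run Python bytecode on several CPUs at once, so for CPU-bound work a `ProcessPoolExecutor` could be passed in instead of the thread pool used here.

```python
from concurrent.futures import ThreadPoolExecutor


def split(numbers, subtask_count):
    """Split a list into `subtask_count` contiguous chunks of near-equal size."""
    size, extra = divmod(len(numbers), subtask_count)
    chunks, start = [], 0
    for i in range(subtask_count):
        end = start + size + (1 if i < extra else 0)
        chunks.append(numbers[start:end])
        start = end
    return chunks


def parallel_sum(numbers, subtask_count, executor):
    """Sum `numbers` by summing each chunk as a separate subtask."""
    chunks = split(numbers, subtask_count)
    partial_sums = executor.map(sum, chunks)  # one subtask per chunk
    return sum(partial_sums)                  # combine the partial results


numbers = list(range(1, 101))
with ThreadPoolExecutor(max_workers=2) as pool:
    total = parallel_sum(numbers, subtask_count=4, executor=pool)
print(total)  # 5050
```

Passing the executor as a parameter keeps the splitting logic separate from the decision about how many workers exist and how they are scheduled.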