Concurrent systems can be implemented using different concurrency models. A concurrency model specifies how threads in the system collaborate to complete the tasks they are given. Different concurrency models split the tasks in different ways, and the threads may communicate and collaborate in different ways.

## Concurrency Models And Distributed System Similarities

In a concurrent system different threads communicate with each other. In a distributed system different processes communicate with each other (possibly on different computers). Threads and processes are quite similar to each other in nature. That is why different concurrency models often look similar to different distributed system architectures.

Distributed systems have the extra challenge that the network may fail, or that a remote computer or process may be down. However, a concurrent system running on a big server may experience similar problems if a CPU fails, a network card fails, a disk fails, etc. The probability of failure may be lower, but it can theoretically still happen.

Because of the similarities between concurrency models and distributed system architectures, they often borrow ideas from each other. For instance, models for distributing work among worker threads are often similar to models of load balancing in distributed systems. The same is true of error handling techniques like logging, failover, idempotency of tasks, etc.

## Shared State VS Separate State

### Shared State

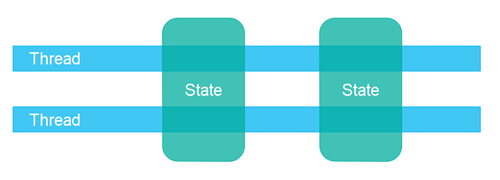

An important aspect of a concurrency model is whether the components and threads are designed to share state among the threads, or to have separate state which is never shared among the threads.

Shared state means that the different threads in the system share some state (data, typically one or more objects) among them. When threads share state, problems like **race conditions** and **deadlocks** may occur. Whether they do depends on how the threads use and access the shared objects.
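As a minimal sketch of the race condition problem, consider several threads incrementing one shared counter. The class and method names below (`SharedCounterDemo`, `UnsafeCounter`, `SafeCounter`) are made up for illustration. The unsynchronized version may lose updates, while the `synchronized` version does not.

```java
// Hypothetical demo class; the names below are illustrative, not from the text.
class SharedCounterDemo {

    // Shared state without coordination: count++ is a read-modify-write
    // sequence, so concurrent increments can overwrite each other.
    static class UnsafeCounter {
        private int count = 0;
        void increment() { count++; }
        int get() { return count; }
    }

    // Same shared state, but access is serialized with the object's lock.
    static class SafeCounter {
        private int count = 0;
        synchronized void increment() { count++; }
        synchronized int get() { return count; }
    }

    // Starts `threads` threads that each run `task` `perThread` times,
    // then waits for all of them to finish.
    static void runConcurrently(int threads, int perThread, Runnable task) {
        Thread[] pool = new Thread[threads];
        for (int i = 0; i < threads; i++) {
            pool[i] = new Thread(() -> {
                for (int j = 0; j < perThread; j++) {
                    task.run();
                }
            });
            pool[i].start();
        }
        try {
            for (Thread t : pool) {
                t.join();
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }

    static int unsafeTotal(int threads, int perThread) {
        UnsafeCounter counter = new UnsafeCounter();
        runConcurrently(threads, perThread, counter::increment);
        return counter.get(); // may be lower than threads * perThread
    }

    static int safeTotal(int threads, int perThread) {
        SafeCounter counter = new SafeCounter();
        runConcurrently(threads, perThread, counter::increment);
        return counter.get(); // always threads * perThread
    }
}
```

Calling `SharedCounterDemo.unsafeTotal(4, 10000)` can return less than 40000 on some runs, because increments from different threads interleave. The result of the unsafe version varies between runs and machines, which is part of what makes race conditions hard to debug.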

### Separate State

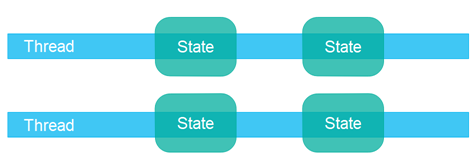

Separate state means that the different threads in the system do not share any state among them. Communication between threads is achieved through the exchanging of immutable objects among them, or by sending copies of objects (data) among them. When no two threads write to the same object (data / state), you can avoid most of the common concurrency problems.
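A rough sketch of the separate state idea, assuming a hypothetical `Measurement` message type: the producer thread hands immutable objects to the consumer through a `BlockingQueue`, and the running sum lives only inside the consumer thread.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;

// Hypothetical demo class; names are illustrative only.
class SeparateStateDemo {

    // Immutable message: all fields are final and there are no setters,
    // so it can be handed to another thread without further coordination.
    static final class Measurement {
        final String sensor;
        final int value;

        Measurement(String sensor, int value) {
            this.sensor = sensor;
            this.value = value;
        }
    }

    // The calling thread produces messages; a separate consumer thread sums them.
    static int sumViaQueue(int[] values) {
        final int expected = values.length;
        BlockingQueue<Measurement> queue = new ArrayBlockingQueue<>(16);
        int[] result = new int[1]; // written once by the consumer, read after join()

        Thread consumer = new Thread(() -> {
            int sum = 0; // state owned by the consumer thread only
            try {
                for (int i = 0; i < expected; i++) {
                    sum += queue.take().value;
                }
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
            result[0] = sum;
        });
        consumer.start();

        try {
            for (int v : values) {
                queue.put(new Measurement("sensor-1", v));
            }
            consumer.join();
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return result[0];
    }
}
```

The only object the two threads have in common is the queue itself, which is designed for concurrent use. Neither thread ever writes to data the other one reads.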

## Parallel Workers Concurrency Model

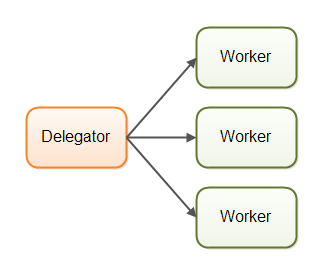

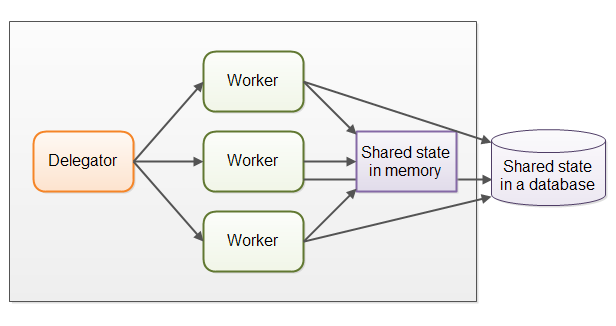

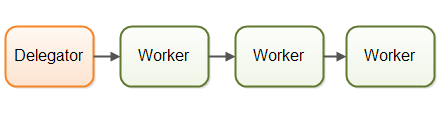

In the parallel workers concurrency model, incoming jobs are assigned to different workers. A delegator distributes the incoming jobs to different workers. Each worker completes the full job. The workers work in parallel, running in different threads, and possibly on different CPUs.

The parallel workers concurrency model is the most commonly used concurrency model in Java applications (although that is changing). Many of the concurrency utilities in the `java.util.concurrent` package are designed for use with this model. The parallel workers concurrency model can be designed to use either shared state or separate state, meaning the workers either have access to some shared state, or they have no shared state.
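One way to sketch this model in Java is with an `ExecutorService`, where the thread pool plays the role of the delegator and its threads are the workers. The class name `ParallelWorkersDemo` and the squaring job are assumptions made for the example.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical demo class; names are illustrative only.
class ParallelWorkersDemo {

    // Each input is one independent job. Every worker completes a full job
    // (here: squaring a number) without touching any shared state.
    static List<Integer> squareAll(List<Integer> inputs, int workers) {
        ExecutorService pool = Executors.newFixedThreadPool(workers);
        try {
            List<Future<Integer>> futures = new ArrayList<>();
            for (Integer n : inputs) {
                futures.add(pool.submit(() -> n * n)); // delegate job to a worker
            }
            List<Integer> results = new ArrayList<>();
            for (Future<Integer> future : futures) {
                results.add(future.get()); // collect results in submission order
            }
            return results;
        } catch (InterruptedException | ExecutionException e) {
            throw new IllegalStateException(e);
        } finally {
            pool.shutdown();
        }
    }
}
```

Increasing the `workers` argument is the "just add more workers" knob. The results come back in input order only because the futures are read in submission order; the jobs themselves may finish in any order.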

### Parallel Workers Model Advantages

The parallel workers concurrency model is easy to understand. To increase the parallelization level of the application you just add more workers.

### Parallel Workers Model Disadvantages

#### Shared State Can Get Complex

If the parallel workers need access to some kind of shared data, either in memory or in a shared database, managing correct concurrent access can get complex. As soon as shared state sneaks into the parallel workers concurrency model, it starts getting complicated.

* Threads need to access the shared data in a way that makes sure that changes made by one thread are visible to the others (pushed to main memory and not just stuck in the CPU cache of the CPU executing the thread).

* Threads need to avoid race conditions, deadlocks and many other shared state concurrency problems.

* Part of the parallelization is lost when threads are waiting for each other when accessing the shared data structures. Many concurrent data structures are blocking, meaning only one thread, or a limited set of threads, can access them at any given time. This may lead to contention on these shared data structures. High contention essentially leads to a degree of serialization of execution (eliminating parallelization) of the part of the code that accesses the shared data structures.

Modern **non-blocking concurrency algorithms** may decrease contention and increase performance, but non-blocking algorithms are hard to implement.
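A small sketch of the basic building block behind many non-blocking algorithms is the compare-and-swap retry loop. The example below uses `AtomicInteger.compareAndSet` to maintain a shared maximum without locks. The class and method names are assumptions for illustration.

```java
import java.util.concurrent.atomic.AtomicInteger;

// Hypothetical demo class; names are illustrative only.
class CasDemo {

    // Atomically raises `max` to `candidate` if candidate is larger.
    // No thread ever blocks; a thread that loses a race simply retries.
    static void updateMax(AtomicInteger max, int candidate) {
        while (true) {
            int current = max.get();
            if (candidate <= current) {
                return; // nothing to do
            }
            if (max.compareAndSet(current, candidate)) {
                return; // our write won
            }
            // Another thread changed the value between get() and
            // compareAndSet(); loop and re-check against the new value.
        }
    }
}
```

Even this tiny loop has to account for another thread changing the value between the read and the write. Larger non-blocking data structures need the same care at every step, which is where the implementation difficulty comes from.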

**Persistent data structures** are another alternative. A persistent data structure always preserves the previous version of itself when modified. Thus, if multiple threads point to the same persistent data structure and one thread modifies it, the modifying thread gets a reference to the new structure. All other threads keep a reference to the old structure which is still unchanged and thus consistent. Although persistent data structures are an elegant solution to concurrent modification of shared data structures, they tend not to perform that well.
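To illustrate, here is a minimal sketch of a persistent stack, written for this explanation rather than taken from any library. Every `push` returns a new version that shares its tail with the old one, and the old version is never modified.

```java
// Hypothetical minimal persistent (immutable) stack; not a library class.
final class PersistentStack<T> {
    private final T head;                  // top element, null for the empty stack
    private final PersistentStack<T> tail; // previous version, shared and never copied
    private final int size;

    private PersistentStack(T head, PersistentStack<T> tail, int size) {
        this.head = head;
        this.tail = tail;
        this.size = size;
    }

    static <T> PersistentStack<T> empty() {
        return new PersistentStack<>(null, null, 0);
    }

    // Returns a NEW stack; `this` stays exactly as it was.
    PersistentStack<T> push(T value) {
        return new PersistentStack<>(value, this, size + 1);
    }

    // Returns the previous version (the stack without its top element).
    PersistentStack<T> pop() {
        if (size == 0) {
            throw new IllegalStateException("stack is empty");
        }
        return tail;
    }

    T peek() {
        return head;
    }

    int size() {
        return size;
    }
}
```

A thread holding a reference to `v1` keeps seeing a one-element stack even after another thread calls `v1.push("b")`, because that call only produces a new object. The cost is an allocation per modification, which hints at why such structures tend to be slower than their mutable counterparts.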

#### Stateless Workers

Shared state can be modified by other threads in the system. Therefore, workers must reread the state every time they need it, to make sure they are working on the latest copy. This is true no matter whether the shared state is kept in memory or in an external database. A worker that does not keep state internally (but rereads it every time it is needed) is called stateless. Rereading data every time you need it can get slow, especially if the state is stored in an external database.

#### Job Ordering Is Nondeterministic

Another disadvantage of the parallel worker model is that the job execution order is nondeterministic. The nondeterministic nature of the parallel worker model makes it hard to reason about the state of the system at any given point in time. It also makes it harder to guarantee that one task finishes before another. This does not always cause problems though. It depends on the needs of the system.

## Assembly Line Concurrency Model

This model goes by different names depending on the platform / community. Some of the names associated with it include **reactive systems** and **event driven systems**.

The workers are organized like workers at an assembly line in a factory. Each worker only performs a part of the full job. When that part is finished, the worker forwards the job to the next worker.

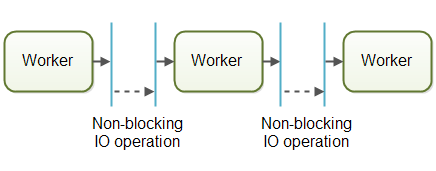

Systems using this concurrency model are usually designed to use non-blocking IO. Non-blocking IO means that when a worker starts an IO operation (e.g. reading a file or data from a network connection), the worker does not wait for the IO call to finish. IO operations are slow, so waiting for them to complete is a waste of CPU time. The CPU could be doing something else in the meantime. When the IO operation finishes, the result of the IO operation (e.g. data read or status of data written) is passed on to another worker.

With non-blocking IO, the IO operations determine the boundary between workers. A worker does as much as it can until it has to start an IO operation. Then it gives up control over the job. When the IO operation finishes, the next worker in the assembly line continues working on the job, until that too has to start an IO operation etc.
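The hand-off between stages can be sketched with `CompletableFuture`, where each `thenApply` acts as the next worker on the line. This is only an approximation: the "IO" here is simulated with `supplyAsync`, and the class and method names are invented for the example.

```java
import java.util.concurrent.CompletableFuture;

// Hypothetical demo class; names are illustrative only.
class AssemblyLineDemo {

    // Stage 1: stands in for a non-blocking read. supplyAsync runs the
    // supplier on another thread and returns immediately with a future.
    static CompletableFuture<String> readRequest(String raw) {
        return CompletableFuture.supplyAsync(() -> raw.trim());
    }

    // Wires the stages together. Each stage runs only when the previous
    // stage's result is available; no stage waits on IO itself.
    static CompletableFuture<String> handle(String raw) {
        return readRequest(raw)
                .thenApply(String::toUpperCase)         // stage 2: transform
                .thenApply(body -> "handled:" + body);  // stage 3: build response
    }

    // Convenience for demos and tests only: blocks until the line is done.
    static String handleAndWait(String raw) {
        return handle(raw).join();
    }
}
```

In a real non-blocking system the caller would attach a further stage to the future returned by `handle` instead of calling `join()`, which blocks and is used here only to make the result easy to inspect.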

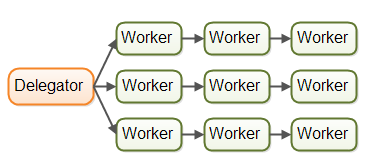

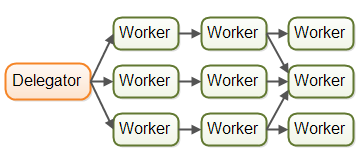

In reality, the jobs may not flow along a single assembly line. Since most systems can perform more than one kind of job, jobs flow from worker to worker depending on which part of the job needs to be executed next. There could be multiple different virtual assembly lines running at the same time.

Jobs may even be forwarded to more than one worker for concurrent processing. For instance, a job may be forwarded to both a job executor and a job logger.

### Reactive, Event Driven Systems

Systems using an assembly line concurrency model are also sometimes called reactive systems, or event driven systems. The system's workers react to events occurring in the system, either received from the outside world or emitted by other workers. Examples of events could be an incoming HTTP request, or a certain file that has finished loading into memory.

Some of the reactive / event driven platforms available include:

* Vert.x

* Akka

### Actors VS Channels

Actors and channels are two similar examples of assembly line (or reactive / event driven) models.

#### Actors

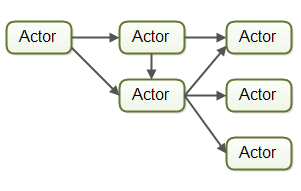

In the actor model each worker is called an actor. Actors can send messages directly to each other. Messages are sent and processed asynchronously. Actors can be used to implement one or more job processing assembly lines, as described earlier.
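A bare-bones sketch of an actor, assuming a hypothetical `CounterActor` with a mailbox queue and one dedicated thread, could look like the following. Platforms such as Akka provide far more (supervision, routing, scheduling), so this only shows the core idea of asynchronous messages and private state.

```java
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

// Hypothetical minimal actor; not the API of any actor library.
final class CounterActor {
    // Assumed sentinel message that tells the actor to stop.
    private static final int STOP = Integer.MIN_VALUE;

    private final BlockingQueue<Integer> mailbox = new LinkedBlockingQueue<>();
    private final Thread thread = new Thread(this::processMailbox);
    private int total = 0; // private state, touched only by the actor thread

    private CounterActor() { }

    static CounterActor start() {
        CounterActor actor = new CounterActor();
        actor.thread.start();
        return actor;
    }

    // Asynchronous send: enqueues the message and returns immediately.
    void send(int amount) {
        mailbox.add(amount);
    }

    // Stops the actor after all earlier messages are processed.
    int stopAndGetTotal() {
        mailbox.add(STOP);
        try {
            thread.join(); // after join(), reading `total` is safe
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
        return total;
    }

    // The actor processes one message at a time, in mailbox order.
    private void processMailbox() {
        try {
            while (true) {
                int message = mailbox.take();
                if (message == STOP) {
                    return;
                }
                total += message;
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }
}
```

Because only the actor's own thread ever reads or writes `total`, the message handling code needs no locks, even though any number of threads may call `send` concurrently.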

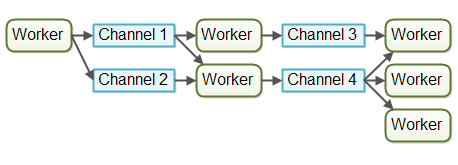

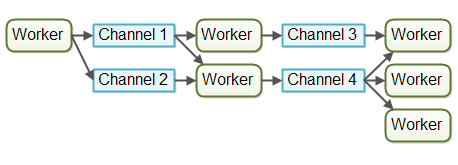

#### Channels

In the channel model, workers do not communicate directly with each other. Instead, they publish their messages (events) on different channels. Other workers can then listen for messages on these channels without the sender knowing who is listening.

The channel model seems more flexible. A worker does not need to know which workers will process the job later in the assembly line. It just needs to know what channel to forward the job to. Listeners on channels can subscribe and unsubscribe without affecting the workers writing to the channels. This allows for a somewhat looser coupling between workers.
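The decoupling can be sketched with a small, hypothetical `Channel` class. For brevity it delivers messages synchronously on the publisher's thread, which a real channel implementation would normally avoid by handing messages to the listeners' own threads or an event loop.

```java
import java.util.List;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.function.Consumer;

// Hypothetical minimal channel; not the API of any messaging library.
final class Channel<T> {
    // CopyOnWriteArrayList lets listeners subscribe and unsubscribe
    // while another thread is iterating over the list in publish().
    private final List<Consumer<T>> listeners = new CopyOnWriteArrayList<>();

    void subscribe(Consumer<T> listener) {
        listeners.add(listener);
    }

    void unsubscribe(Consumer<T> listener) {
        listeners.remove(listener);
    }

    // The publisher knows only the channel, never who is listening.
    // Simplification: delivery happens on the caller's thread.
    void publish(T message) {
        for (Consumer<T> listener : listeners) {
            listener.accept(message);
        }
    }
}
```

A worker that calls `publish` compiles and runs the same way whether zero, one, or many listeners are subscribed, which is the looser coupling described above.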

### Assembly Line Advantages

#### No Shared State

Workers do not share state with each other, therefore they can be implemented without having to think about all the concurrency problems that may arise from concurrent access to shared state. This makes it much easier to implement workers. You implement a worker as if it was the only thread performing that work - essentially a single-threaded implementation.

#### Stateful Workers

Since workers know that no other threads modify their data, the workers can be stateful. They can keep the data they need to operate in memory, only writing changes back to any external storage systems. A stateful worker can therefore often be faster than a stateless worker.

#### Better Hardware Conformity

Single-threaded code has the advantage that it often conforms better with how the underlying hardware works. It's possible to create more optimized data structures and algorithms when you can assume the code is executed in single-threaded mode.

Furthermore, single-threaded stateful workers can cache data in memory as mentioned above. When data is cached in memory, there is a high probability that this data is also cached in the CPU cache of the CPU executing the thread. This makes accessing cached data even faster.

Hardware conformity means that the code is written in a way that naturally benefits from how the underlying hardware works.

#### Job Ordering Is Possible

It is possible to implement a concurrent system according to the assembly line concurrency model in a way that guarantees job ordering. Job ordering makes it easier to reason about the state of a system at any given point in time. Furthermore, you could write all incoming jobs to a log. This log could then be used to rebuild the state of the system from scratch in case any part of the system fails. The jobs are written to the log in a certain order, and this order becomes the guaranteed job order.

Implementing a guaranteed job order is not necessarily easy, but it is often possible. If you can, it greatly simplifies tasks like backup, restoring data, replicating data etc. as this can all be done via the log file(s).
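As a rough sketch of the log idea, the hypothetical `JobLog` class below appends every job to an in-memory list before applying it, and can rebuild the state by replaying that list. The job format (a number to add, or the word `double`) is invented purely to make the order of jobs matter. The class assumes a single writer thread and is not thread safe.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;

// Hypothetical single-writer job log; names and job format are assumptions.
final class JobLog {
    private final List<String> entries = new ArrayList<>();
    private long balance = 0;

    // Log first, then apply: the log position defines the job order.
    void submit(String job) {
        entries.add(job);
        balance = apply(balance, job);
    }

    long balance() {
        return balance;
    }

    List<String> entries() {
        return Collections.unmodifiableList(entries);
    }

    // A job is either "double" or a signed integer amount such as "5" or "-3".
    static long apply(long balance, String job) {
        if (job.equals("double")) {
            return balance * 2;
        }
        return balance + Long.parseLong(job);
    }

    // Rebuilds the state from scratch by re-running the jobs in log order.
    static long replay(List<String> entries) {
        long balance = 0;
        for (String job : entries) {
            balance = apply(balance, job);
        }
        return balance;
    }
}
```

Submitting `"5"`, `"double"`, `"3"` yields a balance of 13, and replaying the same entries yields 13 again. Replaying them in a different order (for example `"3"`, `"5"`, `"double"`) would give 16, which is why the guaranteed order matters for recovery and replication.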

### Assembly Line Disadvantages

The main disadvantage of the assembly line concurrency model is that the execution of a job is often spread out over multiple workers, and thus over multiple classes in your project. Thus it becomes harder to see exactly what code is being executed for a given job.

The code may also become harder to write. Worker code is sometimes written as callback handlers, which can result in what some developers call callback hell when too many callbacks are nested. Callback hell simply means that it gets hard to track what the code is really doing across all the callbacks, and hard to make sure that each callback has access to the data it needs.

The parallel workers concurrency model tends to make it easier to track what the code is doing. You can open the worker code and read the code executed pretty much from start to finish. Of course parallel worker code may also be spread over many different classes, but the execution sequence is often easier to read from the code.

## Which Concurrency Model Is Best?

The answer is that it depends on what your system is supposed to do. If your jobs are naturally parallel, independent, and with no shared state necessary, you might be able to implement your system using the parallel worker model.

Many jobs are not naturally parallel and independent though. For these kinds of systems, the assembly line concurrency model has more advantages than disadvantages, and more advantages than the parallel workers model.

You don't even have to code all that assembly line infrastructure yourself. Modern platforms like Vert.x have implemented a lot of that for you.